What is ChatGPT?

Have you ever hunted for answers to your daily questions?

If so, meet ChatGPT, an AI-powered tool that can answer almost anything you can think of, with wide-ranging abilities and often impressive accuracy. ChatGPT is a professional, mobile-friendly tool designed to help you get the answers you need, on topics ranging from sports trivia to how to become a billionaire. It lets you answer questions quickly without wasting time searching for the answers yourself. Got a question about the weather? ChatGPT has an answer for you. Need to know whether your credit card will charge the correct amount? ChatGPT can save you the time of working through those details by hand.

Recently, OpenAI released ChatGPT, a new language model which is an improved version of GPT-3 and, possibly, gives us a peek into what GPT-4 will be capable of when it is released early next year (as is rumoured). With ChatGPT it is possible to have an actual conversation with the model, referring back to previous points in the conversation.
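One way to picture "referring back to previous points" is a minimal sketch, assuming a chat model that receives the whole conversation so far as its input on every turn. The role/content message format, the `Conversation` class and the `fake_model` function below are hypothetical stand-ins, not OpenAI's internals. The only point is that the model's "memory" of earlier turns comes from resending them.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Stores every turn so later requests can include earlier ones (hypothetical format)."""
    messages: list[dict[str, str]] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        self.messages.append({"role": role, "content": content})

    def as_prompt(self) -> str:
        # Flatten the full history into one input; a real system would use its own template.
        return "\n".join(f"{m['role']}: {m['content']}" for m in self.messages)


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a language model: reports how many user turns it can see."""
    user_turns = sum(1 for line in prompt.splitlines() if line.startswith("user:"))
    return f"I can see {user_turns} user message(s) so far."


def chat(conv: Conversation, user_text: str) -> str:
    conv.add("user", user_text)
    # The model receives the whole history, not just the latest message.
    reply = fake_model(conv.as_prompt())
    conv.add("assistant", reply)
    return reply


if __name__ == "__main__":
    conv = Conversation()
    print(chat(conv, "What is RLHF?"))
    print(chat(conv, "Can you expand on your previous answer?"))
```

Because the second request carries the first question and answer with it, the (stand-in) model can "see" two user turns instead of one.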

How can we use ChatGPT?

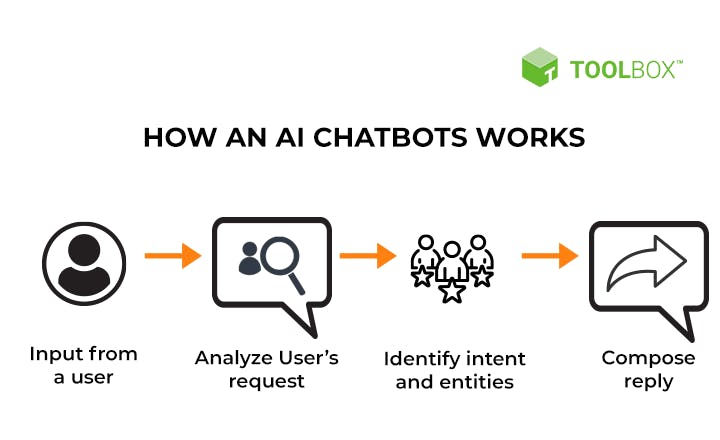

It can "speak" your language and can answer a wide range of questions, including ones involving technical jargon, URLs, phone numbers and much more. Users type in a question or a task, and the model draws on patterns it learned from billions of examples of text from across the Internet to come up with a response designed to mimic a human. It answers with confidence, aiming to feel like "real human" support.
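To give a feel for what learning from "examples of text" means, here is a deliberately tiny sketch: a bigram model that counts which word follows which in a toy corpus, then generates text by repeatedly appending the most frequent next word. This is an illustrative assumption only. ChatGPT uses a large neural network (a transformer) over tokens rather than word counts, but the loop of producing a response one piece at a time, based on patterns seen in training text, is the same basic idea.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, how often every other word follows it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(follows: dict[str, Counter], start: str, max_words: int = 8) -> str:
    """Greedy generation: keep appending the most frequent next word seen in training."""
    out = [start]
    for _ in range(max_words - 1):
        options = follows.get(out[-1])
        if not options:
            break  # no known continuation for this word
        out.append(options.most_common(1)[0][0])
    return " ".join(out)


corpus = "the model answers the question and the model explains the answer"
model = train_bigrams(corpus)
print(generate(model, "the", max_words=5))  # prints: the model answers the model
```

Greedy picking keeps the sketch deterministic; real chat models usually sample from a probability distribution instead, which is one reason the same prompt can produce different answers.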

Which technology is used in ChatGPT?

ChatGPT is fine-tuned from a model in the GPT-3.5 series, which finished training in early 2022. ChatGPT and GPT-3.5 were trained on an Azure AI supercomputing infrastructure. OpenAI trained this model using Reinforcement Learning from Human Feedback (RLHF), using the same methods as InstructGPT, but with slight differences in the data collection setup. They trained an initial model using supervised fine-tuning: human AI trainers provided conversations in which they played both sides (the user and an AI assistant). The trainers were given access to model-written suggestions to help them compose their responses.
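The supervised fine-tuning step can be sketched as turning each trainer-written dialogue into training pairs. A minimal sketch, assuming a hypothetical `Turn` format: every assistant turn becomes a target, and everything said before it becomes the context the model learns to continue from. OpenAI's actual data format is not described in the source.

```python
from typing import NamedTuple


class Turn(NamedTuple):
    role: str  # "user" or "assistant"; in a demonstration, a human trainer writes both
    text: str


def sft_examples(dialogue: list[Turn]) -> list[tuple[str, str]]:
    """Turn one demonstration dialogue into (context, target) pairs for supervised fine-tuning.

    Each assistant turn becomes a target; its context is every turn that came before it.
    """
    examples = []
    for i, turn in enumerate(dialogue):
        if turn.role == "assistant":
            context = "\n".join(f"{t.role}: {t.text}" for t in dialogue[:i])
            examples.append((context, turn.text))
    return examples


demo = [
    Turn("user", "What is RLHF?"),
    Turn("assistant", "Reinforcement Learning from Human Feedback."),
    Turn("user", "Who provides the feedback?"),
    Turn("assistant", "Human AI trainers."),
]
for context, target in sft_examples(demo):
    print(repr(context), "->", repr(target))
```

During fine-tuning, the model would then be trained to predict each target given its context, so it imitates how the trainers answered in the assistant role.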

To create a reward model for reinforcement learning, they needed to collect comparison data, which consisted of two or more model responses ranked by quality. To collect this data, they took conversations that AI trainers had with the chatbot. They randomly selected a model-written message, sampled several alternative completions, and had AI trainers rank them. Using these reward models, they fine-tuned the model with Proximal Policy Optimization (PPO). They performed several iterations of this process.
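The ranking and PPO steps can be made concrete with a small sketch. Following the pairwise approach described for InstructGPT, a ranking of K completions is expanded into all K(K-1)/2 (better, worse) pairs, and the reward model is trained to minimise -log(sigmoid(r_better - r_worse)). The reward scores below are made-up placeholders, not outputs of a real model, and `ppo_clipped_objective` shows only the per-sample clipped surrogate that PPO maximises, not a full training loop.

```python
import math
from itertools import combinations


def ranking_to_pairs(ranked: list[str]) -> list[tuple[str, str]]:
    """Expand a best-to-worst ranking into every (preferred, rejected) pair."""
    # combinations() preserves input order, so the first item of each pair is the better one.
    return list(combinations(ranked, 2))


def pairwise_loss(reward_better: float, reward_worse: float) -> float:
    """-log(sigmoid(r_better - r_worse)): small when the reward model agrees with the ranking."""
    diff = reward_better - reward_worse
    return math.log1p(math.exp(-diff))  # algebraically equal to -log(sigmoid(diff))


def ppo_clipped_objective(ratio: float, advantage: float, epsilon: float = 0.2) -> float:
    """Per-sample PPO surrogate: min(ratio * A, clip(ratio, 1 - eps, 1 + eps) * A)."""
    clipped = max(1.0 - epsilon, min(ratio, 1.0 + epsilon))
    return min(ratio * advantage, clipped * advantage)


# A trainer ranked three sampled completions from best (A) to worst (C).
ranking = ["A", "B", "C"]
pairs = ranking_to_pairs(ranking)  # [('A', 'B'), ('A', 'C'), ('B', 'C')]

# Placeholder scores a reward model might assign (made-up numbers for illustration).
scores = {"A": 2.0, "B": 0.5, "C": -1.0}
mean_loss = sum(pairwise_loss(scores[w], scores[l]) for w, l in pairs) / len(pairs)
print(f"{len(pairs)} pairs, mean loss {mean_loss:.3f}")

# If the updated policy makes a good action 1.5x more likely, clipping caps the credit at 1.2x.
print(ppo_clipped_objective(ratio=1.5, advantage=1.0))  # 1.2
```

The clipping is what keeps each PPO update "proximal": the policy gets no extra reward for moving further than a factor of 1 ± epsilon from its previous behaviour in a single step.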

Limitations of ChatGPT

ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers. Fixing this issue is challenging, as:

(1) during RL training, there’s currently no source of truth;

(2) training the model to be more cautious causes it to decline questions that it can answer correctly; and

(3) supervised training misleads the model because the ideal answer depends on what the model knows, rather than what the human demonstrator knows.

ChatGPT is sensitive to tweaks in the input phrasing and to repeated attempts at the same prompt. For example, given one phrasing of a question, the model can claim not to know the answer, but given a slight rephrase, it can answer correctly. The model is often excessively verbose and overuses certain phrases, such as restating that it’s a language model trained by OpenAI. These issues arise from biases in the training data (trainers prefer longer answers that look more comprehensive) and well-known over-optimization issues.

Ideally, the model would ask clarifying questions when the user provides an ambiguous query; instead, the current models usually guess what the user intended. While OpenAI has made efforts to make the model refuse inappropriate requests, it will sometimes respond to harmful instructions or exhibit biased behaviour. OpenAI uses its Moderation API to warn about or block certain types of unsafe content, but expects it to produce some false negatives and false positives for now, and is collecting user feedback to help improve the system.
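Why a content filter is expected to make both kinds of mistake can be shown with a minimal sketch, assuming a hypothetical classifier that gives each message an "unsafe" score between 0 and 1 and blocks anything at or above a single threshold. The scores and labels below are invented for illustration and are not output from OpenAI's Moderation API.

```python
def confusion_counts(scores: list[float], is_unsafe: list[bool], threshold: float) -> dict[str, int]:
    """Count filter mistakes when everything scoring >= threshold is blocked."""
    counts = {"false_positive": 0, "false_negative": 0, "correct": 0}
    for score, unsafe in zip(scores, is_unsafe):
        blocked = score >= threshold
        if blocked and not unsafe:
            counts["false_positive"] += 1  # safe content wrongly blocked
        elif unsafe and not blocked:
            counts["false_negative"] += 1  # unsafe content let through
        else:
            counts["correct"] += 1
    return counts


# Invented example data: classifier scores and the labels a human reviewer might assign.
scores = [0.05, 0.40, 0.55, 0.70, 0.90]
labels = [False, True, False, True, True]

for t in (0.3, 0.5, 0.8):
    print(t, confusion_counts(scores, labels, t))
```

Raising the threshold trades false positives for false negatives. With these invented numbers, one unsafe message scores lower than one safe message, so no threshold removes both kinds of error at once.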

Will it be the end of coding interviews?

In my opinion, it won't end coding interviews; instead, it will help students code much faster and more effectively. Let's hear what ChatGPT itself has to say about this question: